Undergraduate research on speeding up data centers earns ACM first prize

The student’s project targets critical moments where the next instruction in a program is only available in a slower type of memory.

Enlarge

Enlarge

CS undergraduate Nathan Brown competed in the Association for Computing Machinery’s Student Research Competition and took first place among undergraduates. His project provides a means to speed up software applications by reading future program instructions from memory before they’re needed.

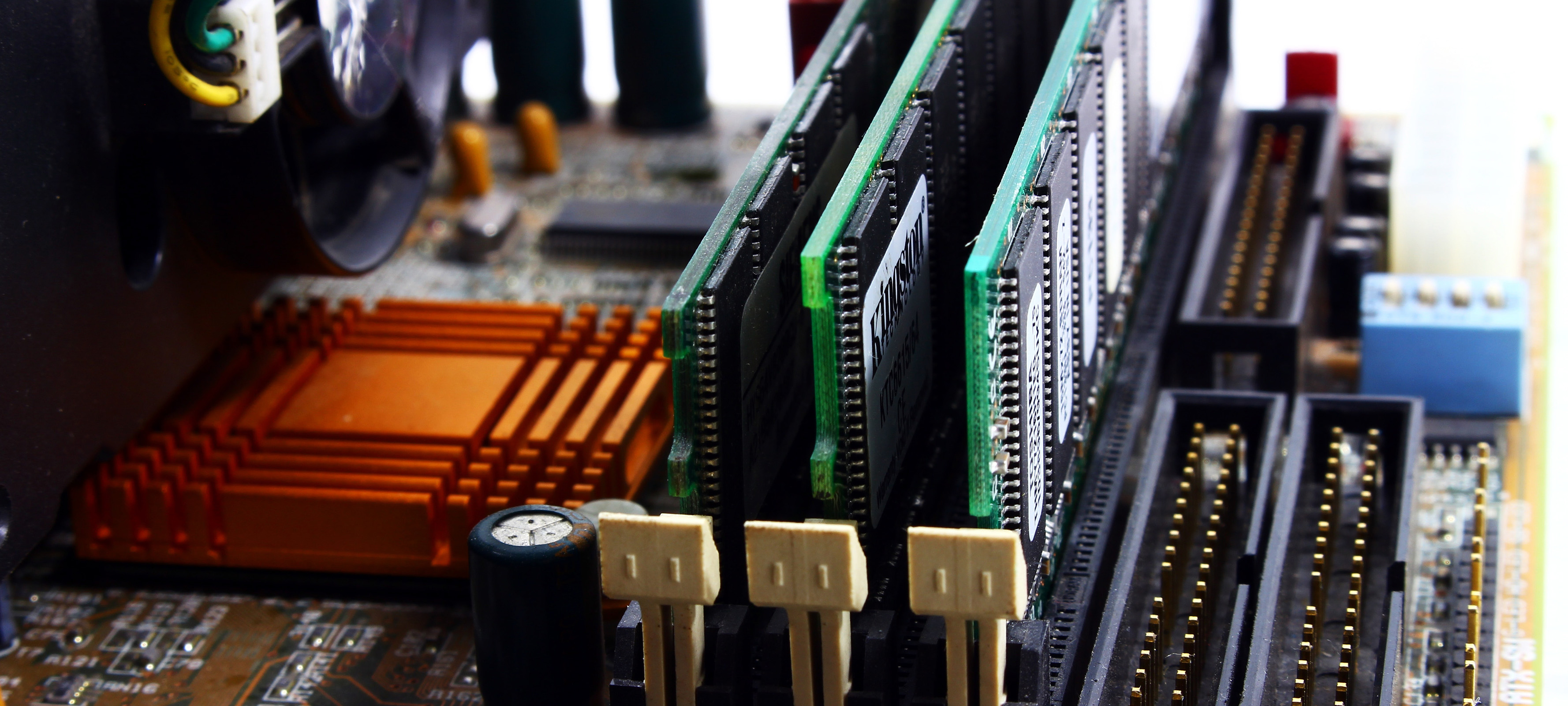

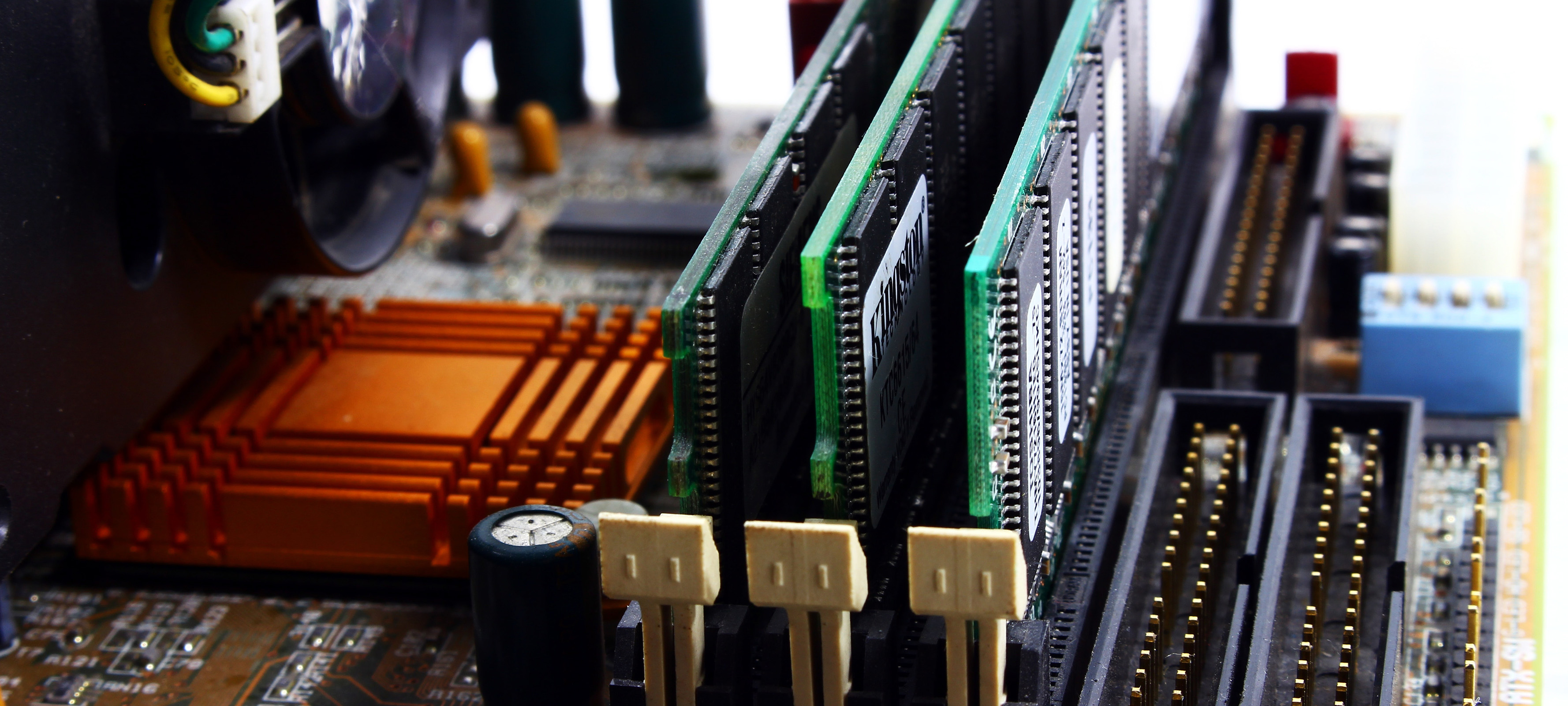

Brown’s work tackles a common problem in the basic computing pipeline – the speed differences between different types of memory. A program is just a sequence of small, simple instructions executed by the processor and read from an executable file on the hard drive, which moves a lot slower than the processor itself (a difference between nanoseconds and milliseconds). This is the reason for the multiple layers of memory above the disk, like RAM and caches. These get progressively smaller as their speed increases due to cost; at the top layer, caches are designed to only hold a small amount of data anticipated to be of use in the immediate future.

Because of this, the cache isn’t large enough to have the next instruction available when the program asks to skip ahead 1000 steps.

“In these cases, the request for an instruction has to go down the layers of memory to RAM or, in the worst case, the disk, causing the processor to stall,” Brown says.

These critical moments where the next needed instruction isn’t available in the cache are the focus of Brown’s project. The program wastes time pulling information from RAM that adds up over time.

“What we’re hoping to do is insert these special types of instructions that say: you might need instruction 13 in the near future, so start getting it from memory now so the wait is already over when you have to execute.”

These special commands are called pre-fetching instructions, and they have the potential to greatly speed up applications that put them to use. Their placement is determined by two pieces of output from the original application: the missed instructions themselves, and the branch history of the executable. This history allows Brown to reconstruct the path taken by the application and identify where it was in its execution when each miss occured. With this info and the latencies of the cache or RAM being used, he can then calculate how many instructions ahead the pre-fetch would need to be to effectively eliminate the processor’s waiting time.

This solution is especially effective in low-level or commonly-run applications, where the benefits of time saved can accumulate with repeated use.

“We’re targeting applications that run in data centers,” Brown says, “so they’re running almost all the time.”

Data center applications represent massive numbers of instructions, so much so that the effectiveness of caches is limited on these programs.

“Combine that with decent input control flow, and the software might be able to give some insight and say that 90% of the time when you see instruction X, instruction Y is coming 10 steps later,” Brown explains, “so you can pre-fetch it every time.”

Brown and his collaborators submitted an extended abstract for the ACM competition and plan to continue the work. They produced an early demonstration of the technique’s effectiveness with a small benchmark experiment, and found that they were able to increase performance by 0.43% after inserting only 2 of these prefetch instructions. Another metric they measured was Misses Per Kilo Instruction (MPKI), or how many instructions were not in the cache per 1,000 instructions. He was able to decrease this number by 13.5% with the same 2 prefetching instructions.

Brown worked on this project, titled “Profile-Guided Instruction Prefetching to Reduce Front-End Stalls of Datacenter Applications,” with assistance from CSE PhD student Tanvir Ahmed Khan and Prof. Baris Kasikci.

MENU

MENU