Test article

A multi-institution research team is working to make online collaborative tools more accessible to people with visual impairments. With a tool called CollabAlly, the team, led by Prof. Anhong Guo at the University of Michigan, has devised an interface that makes the visual cues in shared Google Docs compatible with screen readers and similar tools. The project received a Best Paper Honorable Mention at the 2022 Conference on Human Factors in Computing Systems (CHI 2022).

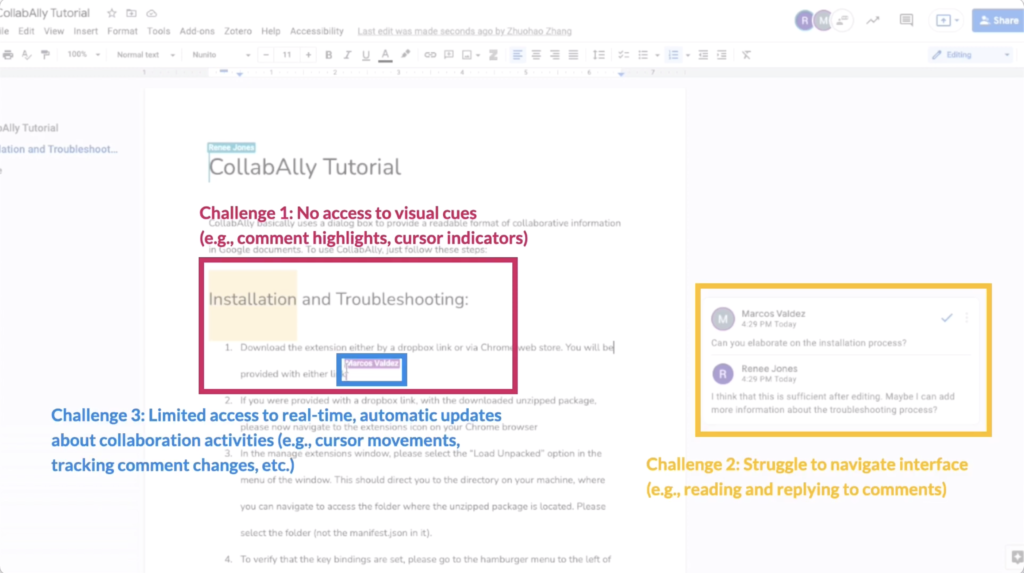

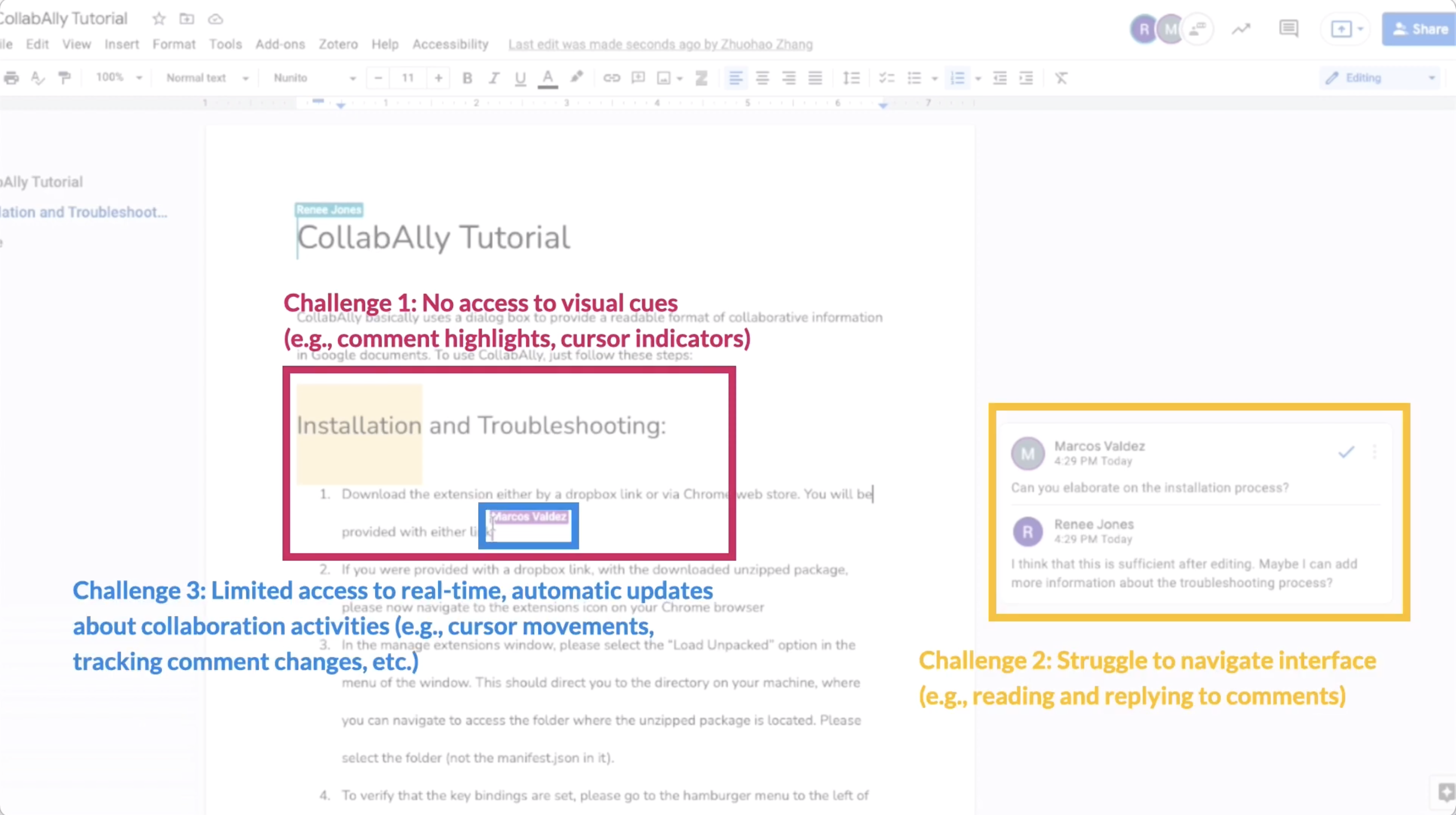

While the core editing capabilities of online word processors work with accessibility tools like screen readers, there’s currently no good way for blind users of these platforms to interact with their collaborative features. Google Docs, for instance, lists the collaborators currently viewing a document with just a profile image, places suggestions and comments in the margin in a text box separate from the main document content, and indicates collaborator cursors and highlighting with different colors and popup names.

“Collaborative document editing is challenging for blind users,” says Zhuohao Zhang, a researcher at the University of Washington, in the project’s CHI 2022 video presentation. “This is because they lack understanding of visual cues in a document, face navigation challenges, and do not have access to automatic, real-time updates about the collaboration environment.”

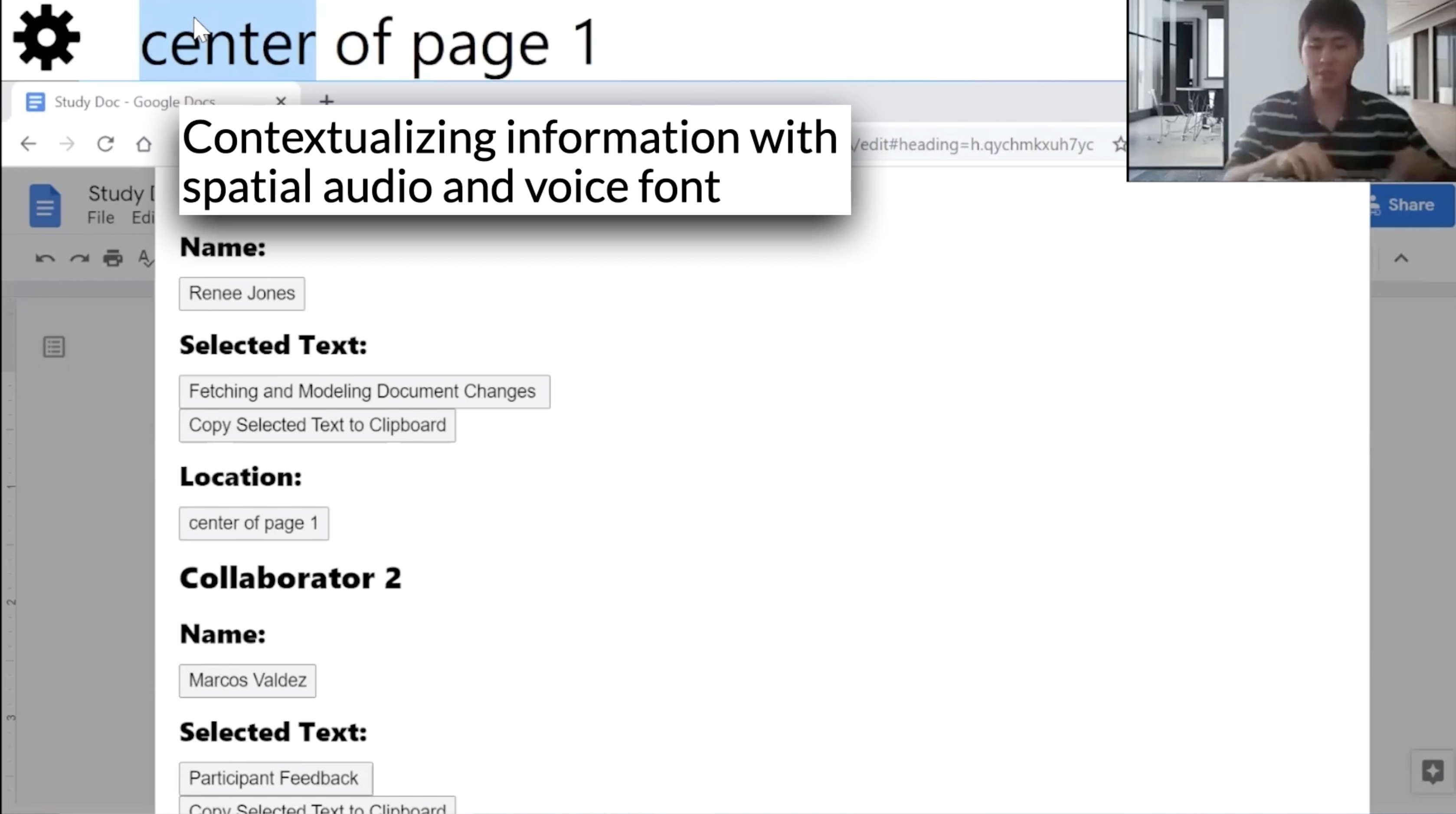

These collaborative environments “create a third dimension” that needs to be interpreted simultaneously, Guo says. With CollabAlly, which can be installed as a browser extension, the group intends to extract the collaborative activity happening in real time, such as which collaborators are viewing the document, new comments, and changes to the text, and provide audio representations of this information.

“CollabAlly will leverage accessible browser dialog popups, voice-guided interactions, and other enabling accessible interaction techniques to allow easy navigation for blind users to navigate through the document space,” says Guo.

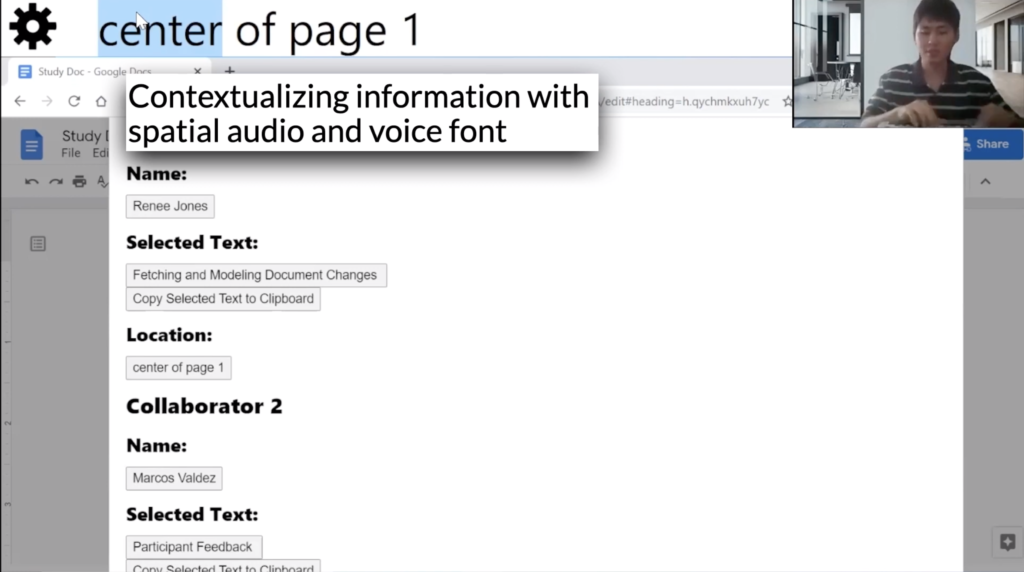

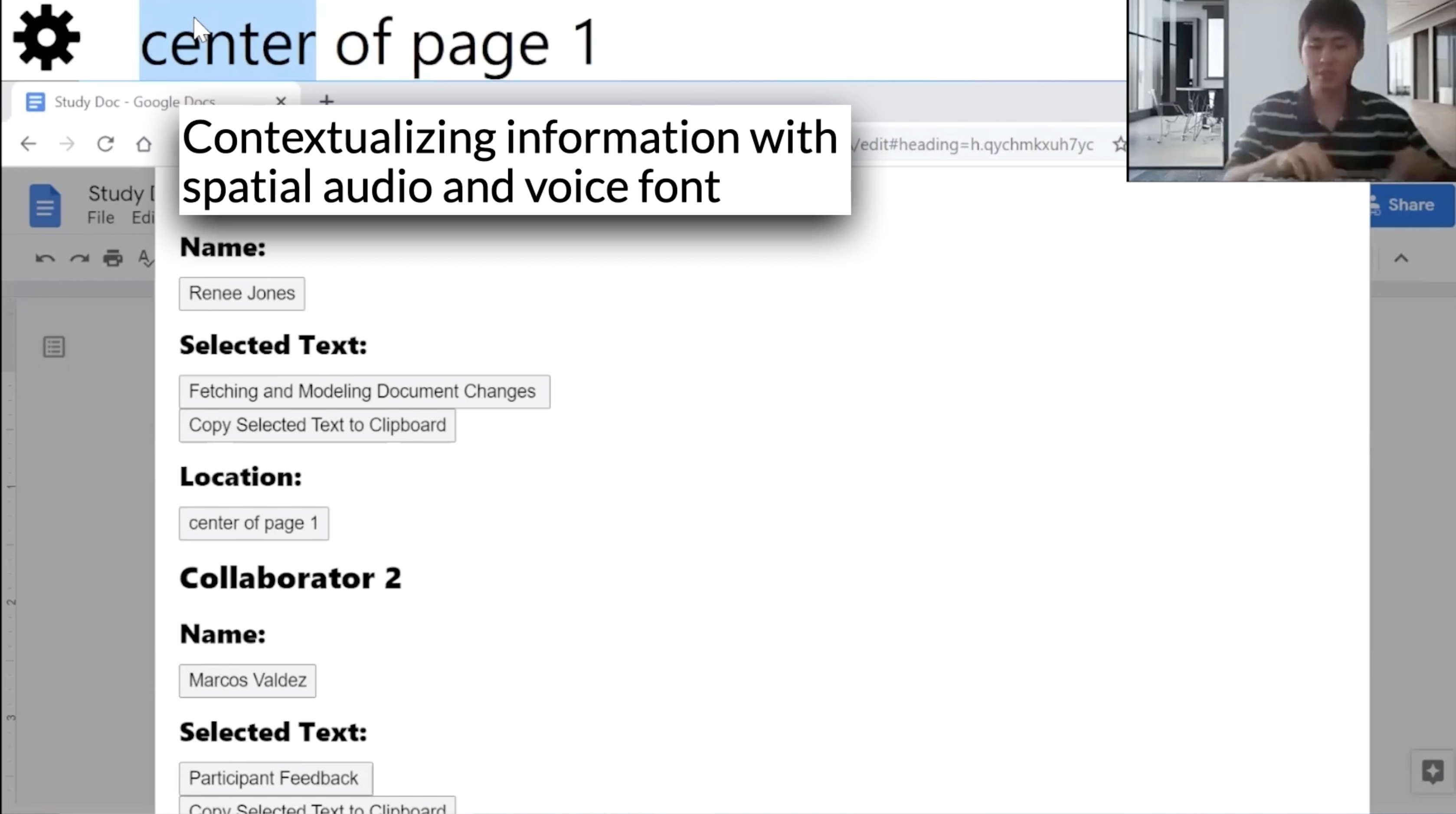

The extension was developed through a series of co-design sessions with JooYoung Seo, an assistant professor of information at the University of Illinois Urbana-Champaign. The sessions identified current practices and challenges in collaborative editing, which the team then addressed iteratively. CollabAlly works by extracting collaborator, comment, and text-change information, along with its context, from the document and presenting it in a dialog box that accessibility tools can easily reach and navigate. The extension uses earcons, or brief audio cues, to communicate background changes in the environment, voice fonts to differentiate collaborators, and spatial audio to communicate the location of document activity.
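To make the three audio techniques concrete, here is a minimal TypeScript sketch of how a piece of document activity could be turned into an audio cue. It is an illustration only, not CollabAlly's code: the `Activity` and `AudioCue` types, the earcon names, and the `panFor` and `makeCueMapper` functions are all hypothetical, and the sketch assumes that activity location is expressed as a line number relative to the listener's cursor.

```typescript
// Hypothetical data model for illustration; not taken from CollabAlly.
type ActivityKind = "collaborator" | "comment" | "textChange";

interface Activity {
  kind: ActivityKind;
  author: string;
  line: number; // document line where the activity happened
}

interface AudioCue {
  earcon: string;     // name of a brief sound identifying the kind of change
  voiceIndex: number; // which synthetic voice speaks for this author
  pan: number;        // -1 (far before the cursor) to 1 (far after it)
}

// Assumed earcon names, one per kind of activity.
const EARCONS: Record<ActivityKind, string> = {
  collaborator: "chime",
  comment: "pop",
  textChange: "click",
};

// Map the distance between the activity and the listener's cursor
// onto a pan value clamped to the range [-1, 1].
function panFor(activityLine: number, cursorLine: number, totalLines: number): number {
  if (totalLines <= 1) return 0;
  const offset = (activityLine - cursorLine) / (totalLines - 1);
  return Math.max(-1, Math.min(1, offset));
}

// Returns a mapper that gives each author a stable voice,
// cycling through the voices when authors outnumber them.
function makeCueMapper(voiceCount: number) {
  const voices = new Map<string, number>();
  return (a: Activity, cursorLine: number, totalLines: number): AudioCue => {
    if (!voices.has(a.author)) {
      voices.set(a.author, voices.size % voiceCount);
    }
    return {
      earcon: EARCONS[a.kind],
      voiceIndex: voices.get(a.author)!,
      pan: panFor(a.line, cursorLine, totalLines),
    };
  };
}
```

In a browser, a `pan` value in this range could drive a Web Audio `StereoPannerNode`, and `voiceIndex` could select an entry from the list returned by `speechSynthesis.getVoices()`. Whether CollabAlly uses those particular APIs is not stated in the article.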

In a study with 11 blind participants, the team demonstrated that CollabAlly provides improved access to collaboration awareness by centralizing the information formerly scattered across different visual cues, sonifying that visual information, and simplifying complex operations.

The paper, titled “CollabAlly: Accessible Collaboration Awareness in Document Editing,” was co-authored by Cheuk Yin Phipson Lee (Carnegie Mellon University), Zhuohao Zhang (University of Washington), Jaylin Herskovitz (U-M CSE), JooYoung Seo (University of Illinois Urbana-Champaign), and Anhong Guo.
