SLAM-ming good hardware for drone navigation

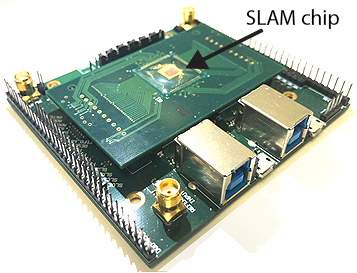

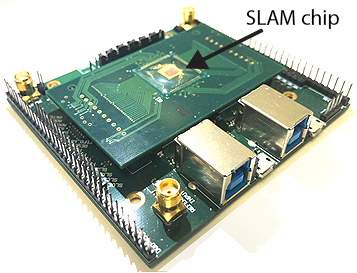

Researchers built the first visual SLAM processor on a single chip that provides highly accurate, low-power, and real-time results.

Researchers at the University of Michigan have developed an ultra-energy-efficient processor to help autonomous micro aerial vehicles navigate their environment. Using highly effective deep learning algorithms, they implemented visual simultaneous localization and mapping (SLAM) on a single chip with an area of just under 11 square millimeters.

The goal of SLAM is to continually estimate where a moving autonomous device is and how it is oriented (its posture, or pose) within its environment, both vertically and horizontally, while also building a 3D map of that environment as the robot navigates it. SLAM, which is also used extensively in augmented and virtual reality applications, can draw on a variety of sensing and localization techniques, such as lidar, GPS, and cameras.
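To make "location and posture" concrete, here is a minimal 2D sketch, assuming the robot's pose is just an (x, y, heading) triple and that each camera frame yields a relative motion estimate plus a few landmarks observed in the robot's own frame. The names `Pose`, `compose`, and `to_world` are hypothetical illustrations; the chip described here works in 3D with a CNN front end, which this sketch does not model.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical 2D pose: position (x, y) in meters, heading theta in radians."""
    x: float
    y: float
    theta: float


def compose(pose: Pose, dx: float, dy: float, dtheta: float) -> Pose:
    """Apply a motion (dx, dy, dtheta) measured in the robot's own frame."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return Pose(
        x=pose.x + c * dx - s * dy,
        y=pose.y + s * dx + c * dy,
        theta=pose.theta + dtheta,
    )


def to_world(pose: Pose, px: float, py: float) -> tuple[float, float]:
    """Transform a landmark observed in the robot frame into the world frame."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return (pose.x + c * px - s * py, pose.y + s * px + c * py)


# Each step: (dx, dy, dtheta) motion estimate, then landmarks seen in the robot frame.
steps = [
    (1.0, 0.0, math.pi / 2, [(2.0, 0.0)]),
    (1.0, 0.0, 0.0, [(0.0, 1.0)]),
]

pose = Pose(0.0, 0.0, 0.0)
world_map: list[tuple[float, float]] = []
for dx, dy, dtheta, landmarks in steps:
    # Localization: chain the per-frame motion estimate into the running pose.
    pose = compose(pose, dx, dy, dtheta)
    # Mapping: place each landmark, seen from the new pose, into one shared world map.
    for px, py in landmarks:
        world_map.append(to_world(pose, px, py))

print(pose)
print(world_map)
```

Both halves of the problem share the same pose estimate, which is why any error in localization also distorts the map.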

Visual SLAM relies solely on cameras and is the preferred method for autonomous aerial vehicles. However, camera images require extensive processing, which raises power consumption and, in turn, the size and weight of the battery. That added weight is incompatible with micro aerial vehicles, some of which measure only a few centimeters.

A team of faculty and students, led by Professors Hun-Seok Kim, David Blaauw, and Dennis Sylvester, has created the first visual SLAM processor on a single chip that provides highly accurate, low-power, and real-time results.

“Our processor can be integrated into the camera so that as the camera moves, the processor continuously computes its location and posture, while accurately mapping the surrounding area,” said Ziyun Li, a doctoral student working on the project.

The researchers achieved this using convolutional neural networks (CNNs), a class of deep learning models that has proven highly successful in image analysis. However, because CNNs demand significant computation and memory, the team first needed to develop hardware that could run a CNN onboard a flying drone.

Similar systems developed by other groups were either incomplete SLAM systems or limited in quality and range. A more recent attempt relied on an off-chip, high-precision inertial measurement unit to reduce the complexity of using a CNN, which increased both cost and power consumption.

“We implemented a single chip system,” said Li, “that allowed us to use the computation-heavy and accurate CNN algorithm to identify features.” The system also accounted for drift (i.e., small errors that accumulate when mapping a path), resulting in a highly accurate map of the surrounding area.
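Drift is easy to reproduce numerically. The sketch below assumes a robot that drives straight ahead in 1 m steps while its heading estimate picks up a hypothetical constant error of 0.01 degree per step; no real sensor model is implied, and the function name `dead_reckon` is illustrative. Even that tiny per-step error compounds, so after 1 km the estimated endpoint lands tens of meters from the true one. This is why a SLAM system needs a way to correct accumulated error (for example, by recognizing previously seen places); that correction step is not shown here and is not necessarily how the chip does it.

```python
import math


def dead_reckon(num_steps: int, step_m: float, heading_bias_rad: float) -> tuple[float, float]:
    """Integrate straight-line motion whose heading estimate gains a small bias each step.

    The true path runs along the x-axis; the returned point is where the
    biased estimate believes the robot ended up.
    """
    x = y = heading = 0.0
    for _ in range(num_steps):
        heading += heading_bias_rad  # error added on every step and never removed
        x += step_m * math.cos(heading)
        y += step_m * math.sin(heading)
    return x, y


true_end = (1000.0, 0.0)  # 1,000 steps of 1 m straight ahead
est_x, est_y = dead_reckon(num_steps=1000, step_m=1.0, heading_bias_rad=math.radians(0.01))
drift_m = math.hypot(est_x - true_end[0], est_y - true_end[1])
print(f"drift after 1 km: {drift_m:.1f} m")
```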

The system was tested on KITTI, an industry-standard benchmark often used to compare the vision systems of autonomous vehicles. On KITTI automotive scenes spanning more than 1 km, it achieved 97.9% accuracy in translation and 99.34% accuracy in rotation. The 10.92 mm² chip consumed 243.6 mW while processing VGA video at 80 fps, with an energy efficiency of 3.6 TOPS/W, a 15x improvement in performance and a 1.44x improvement in energy efficiency over existing systems.
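The headline figures are mutually consistent, which a few lines of arithmetic confirm. The 879 GOPS throughput comes from the paper title given at the end of this article; dividing it by the 243.6 mW power draw recovers the quoted 3.6 TOPS/W, and dividing the power by the 80 fps frame rate gives the energy spent per frame. The per-frame and per-pixel energies are derived here for illustration and are not figures reported by the researchers; VGA is taken as the standard 640 x 480 resolution.

```python
# Figures quoted in the article and in the paper title.
throughput_gops = 879.0   # giga-operations per second
power_mw = 243.6          # milliwatts
frame_rate_fps = 80       # VGA frames per second
vga_pixels = 640 * 480    # standard VGA resolution

# Energy efficiency: tera-operations per second per watt.
tops_per_watt = (throughput_gops / 1000) / (power_mw / 1000)

# Derived (not reported): mW / (frames/s) = mJ per frame.
energy_per_frame_mj = power_mw / frame_rate_fps
# Convert mJ to nJ (x 1e6) and spread over every pixel in the frame.
energy_per_pixel_nj = energy_per_frame_mj * 1e6 / vga_pixels

print(f"{tops_per_watt:.2f} TOPS/W")
print(f"{energy_per_frame_mj:.3f} mJ per frame")
print(f"{energy_per_pixel_nj:.1f} nJ per pixel")
```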

Future applications of the technology include human-computer interaction, such as virtual reality scenarios in which changing the position of your head signals a specific intention.

The research is described in the paper "An 879GOPS 243mW 80fps VGA Fully Visual CNN-SLAM Processor for Wide-Range Autonomous Exploration" by Ziyun Li, Yu Chen, Luyao Gong, Lu Liu, Dennis Sylvester, David Blaauw, and Hun-Seok Kim.