Multi-institute project “Treehouse” aims to enable sustainable cloud computing

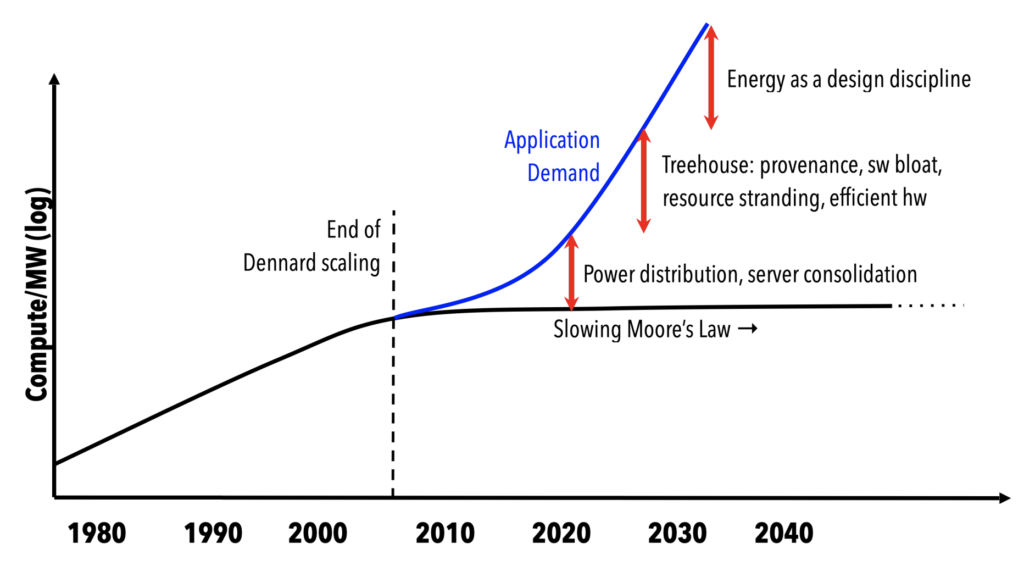

Computing requires energy, and as applications explode in size, centralized datacenters grow to match them. But unlike resources such as computing power and memory, energy consumption is often downplayed as a limiting factor in datacenter expansion. Datacenters already consume an estimated 1-2% of worldwide electricity production, and that demand shows no sign of slowing, raising alarms amid an ongoing energy and climate crisis.

A team of researchers from five institutions, including Prof. Mosharaf Chowdhury from U-M, is working to make energy a first-class resource in cloud software systems, putting it on equal footing with factors like computing power, memory, and cost. Their project, called Treehouse, aims to provide a suite of tools to better monitor datacenter energy consumption and reduce waste from a software perspective. The team released its first whitepaper, “Treehouse: A Case For Carbon-Aware Datacenter Software,” on arXiv on January 7.

Making the most of datacenter resources is already a balancing act, and adding energy-conscious design to the mix poses a number of business challenges. For software developers using cloud resources, a top priority is improving their application’s performance, and performance gains often don’t translate into a smaller carbon footprint.

“One of our first tasks is measuring and understanding the tradeoff between energy used and performance gained,” says Chowdhury. “We want to answer questions like, is it possible to save a lot of energy for running something only slightly slower?”

These measurements should give developers a much clearer picture of their software’s carbon footprint; for most users of cloud resources, the energy consumption of their applications is currently completely opaque. The team will also measure which energy sources a datacenter draws on at different times of operation.

“Datacenter power sources change over time – sometimes they draw from clean energy sources, and sometimes not,” Chowdhury says. “We want to determine if you can delay when your application will run so that it can use a cleaner source.”
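One way to picture the delay idea is as a carbon-aware choice of start time. The Python sketch below is a hypothetical illustration, not Treehouse code: it assumes an hourly carbon-intensity forecast for the grid (the numbers are made up), a job whose length in hours is known in advance, and a `max_delay` parameter standing in for how long a developer is willing to wait. It simply picks the start hour whose run window has the lowest total intensity.

```python
from typing import Sequence


def best_start_hour(intensity: Sequence[float], duration: int, max_delay: int) -> int:
    """Return the start hour (0..max_delay) that minimizes summed carbon intensity.

    intensity: hypothetical hourly grid carbon-intensity forecast (gCO2/kWh).
    duration:  job length in whole hours (assumed known in advance).
    max_delay: hypothetical developer tolerance, in hours of postponement.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    # Only consider start times whose whole run fits inside the forecast.
    last_start = min(max_delay, len(intensity) - duration)
    if last_start < 0:
        raise ValueError("forecast is shorter than the job")
    best, best_cost = 0, float("inf")
    for start in range(last_start + 1):
        cost = sum(intensity[start:start + duration])
        if cost < best_cost:  # strict < keeps the earliest start on ties
            best, best_cost = start, cost
    return best


# Illustrative, made-up forecast in which the grid is cleaner around hours 3-5.
forecast = [450, 420, 380, 200, 180, 210, 400, 430]
print(best_start_hour(forecast, duration=2, max_delay=5))
```

With `max_delay=0` the job runs immediately, so the same function also shows how a developer's stated tolerance widens or narrows the scheduler's options. A real system would also have to account for forecast error and for jobs whose runtime is not known ahead of time.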

The Treehouse project aims to deliver these metrics directly to software developers. The team’s objective is to give developers a real-time look at how changes to their application impact their energy footprint, allowing “energy debugging” similar to existing processes for improving performance.

“We want developers to be able to compare performance and energy tradeoffs,” says Chowdhury, “and understand and reduce their individual carbon use from cloud services.”

The team proposes to give developers what it calls an application’s “energy provenance,” estimated by a supervised machine learning model from the application’s expected resource usage. The model’s inputs will be metrics that the team says are easily measured in software, including network bandwidth, bytes of storage and storage bandwidth, and accelerator cycles, as well as the type and topology of the hardware the application runs on.
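The article does not say which model the team will use, so the sketch below swaps in the simplest supervised learner, an ordinary least-squares linear regression, purely to show the shape of the idea: resource metrics go in, an energy estimate comes out. The feature set (network GB, storage GB, accelerator gigacycles) is a hypothetical subset of the metrics listed above, hardware type and topology are omitted, and the training data are synthetic, generated from a made-up rule.

```python
from typing import List, Sequence


def fit_linear(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Ordinary least squares with an intercept, solved via the normal equations.

    Returns [intercept, w1, ..., wk]. Assumes X has full column rank.
    """
    rows = [[1.0, *map(float, x)] for x in X]  # prepend an intercept column
    k = len(rows[0])
    # Augmented system (A^T A | A^T y).
    m = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * t for r, t in zip(rows, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        if abs(p) < 1e-12:
            raise ValueError("features are linearly dependent")
        m[col] = [v / p for v in m[col]]
        for r in range(k):
            if r != col:
                f = m[r][col]
                m[r] = [a - f * b for a, b in zip(m[r], m[col])]
    return [m[i][k] for i in range(k)]


def predict(w: Sequence[float], x: Sequence[float]) -> float:
    """Estimated energy for one job's feature vector x."""
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))


# Synthetic, noise-free data from a made-up rule (illustration only):
# energy_kj = 5 + 2*net_gb + 0.5*storage_gb + 3*accel_gcycles
# Features per job: [network GB, storage GB, accelerator gigacycles]
samples = [[1, 10, 0], [4, 2, 1], [0, 5, 6], [3, 8, 2], [2, 0, 4]]
energy_kj = [12.0, 17.0, 25.5, 21.0, 21.0]

weights = fit_linear(samples, energy_kj)
print(weights)  # close to [5.0, 2.0, 0.5, 3.0] because the data are noise-free
print(predict(weights, [1, 1, 1]))  # close to 10.5
```

A production model would be trained on measured energy from real hardware and would likely need nonlinear terms and categorical hardware features; the linear form is chosen here only because its fitted weights are easy to read as “energy per unit of each resource.”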

In addition to making an application’s energy use visible to developers in real time, the team aims to devise a way for developers to tell the datacenter how much performance they are willing to trade for energy savings. This added preference can give the datacenter more flexibility in how it allocates its resources, rather than assuming every request needs maximum performance.

The team is also investigating how to allocate datacenter resources on a finer-grained timescale. Today, cloud applications are often allocated their peak expected resources, even though many applications use resources dynamically over the course of their execution. To address this, the team will draw on Chowdhury’s related work on resource disaggregation. This technique uses high-speed networking to link memory and computing resources scattered throughout the datacenter, effectively turning the whole facility into one modular machine for more efficient resource allocation.
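A little arithmetic shows why reserving peak resources wastes capacity. The Python sketch below is an illustrative accounting exercise, not Treehouse or disaggregation code: the per-interval memory trace and the 4 GB allocation block are hypothetical. It compares how much reserved memory sits idle when a job holds its peak for the whole run versus when each interval reserves only what it uses, rounded up to a block.

```python
import math
from typing import Sequence


def idle_fraction_peak(usage: Sequence[float]) -> float:
    """Share of reserved memory left idle if the job holds its peak for the whole run."""
    if not usage or max(usage) <= 0:
        raise ValueError("usage must contain a positive value")
    reserved = max(usage) * len(usage)
    return 1 - sum(usage) / reserved


def idle_fraction_fine_grained(usage: Sequence[float], block: float) -> float:
    """Share left idle if each interval reserves only its usage, rounded up to `block`."""
    if block <= 0:
        raise ValueError("block must be positive")
    reserved = sum(math.ceil(u / block) * block for u in usage)
    if reserved <= 0:
        raise ValueError("usage must contain a positive value")
    return 1 - sum(usage) / reserved


# Hypothetical memory trace for one job, in GB per scheduling interval.
trace = [8, 20, 32, 12, 6, 10]
print(f"peak reservation idle: {idle_fraction_peak(trace):.0%}")  # 54%
print(f"4 GB-block reservation idle: {idle_fraction_fine_grained(trace, 4):.0%}")  # 4%
```

In this made-up trace, more than half of a peak-sized reservation goes unused. Finer-grained allocation shrinks that gap, and the freed memory is the kind of capacity that disaggregation aims to lend to other jobs.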

“Currently half the memory in a datacenter sits idle, but spending energy nonetheless,” Chowdhury says. “By packing more jobs into the unused memory, you end up with more efficient datacenter execution.”

This effort is one of the first software-focused studies of sustainability, an approach the authors say has been overlooked despite the huge footprint of distributed cloud applications.

“Of course, there are a lot of physical aspects to sustainability,” Chowdhury explains, “but a big chunk of energy, especially with the rise of AI and machine learning training, is going inside the data center. We are buying thousands of GPUs and running them at full speed, and no one really knows just how much energy is being spent in the process.”

The Treehouse project is led by Thomas Anderson (University of Washington), Adam Belay (MIT), Mosharaf Chowdhury, Asaf Cidon (Columbia University), and Irene Zhang (Microsoft Research). The project is supported by a $3M NSF-VMware joint grant in the Foundations of Clean and Balanced Datacenters program.
